Why Should You Run Kafka On Kubernetes?

Apache Kafka is a distributed event streaming platform designed to handle large volumes of data at high throughput. It was originally developed at LinkedIn and released as open-source software in 2011. Kafka is written in Java and Scala and runs on the Java Virtual Machine (JVM); it does not depend on the Hadoop ecosystem to manage its cluster. Older Kafka releases rely on Apache ZooKeeper, a centralized service that provides reliable coordination among nodes in a distributed system, to store cluster metadata; newer releases replace ZooKeeper with Kafka's built-in KRaft consensus mode.

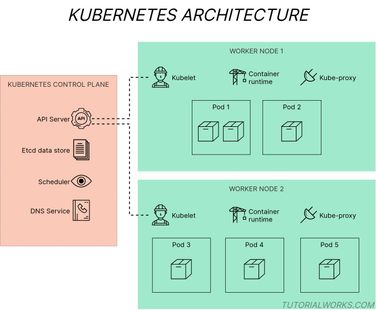

Why should you run Kafka on Kubernetes? Running Kafka on Kubernetes is relatively straightforward. You can use any containerization tool to create containers based on the official Apache Kafka Docker image. Once your containers are running, you configure the brokers to find each other, optionally securing the traffic between them with TLS. You then set up listeners on the Kafka brokers so that they accept connections from clients. Finally, you must make sure your clients know which broker addresses (the bootstrap servers) to connect to.
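The last step, telling clients where the brokers are, can be sketched as follows. This assumes the brokers run as a Kubernetes StatefulSet behind a headless Service, which gives each pod a stable DNS name of the form `<pod>.<service>.<namespace>.svc.cluster.local`. The names `kafka`, `kafka-headless`, and `default`, the port `9092`, and the default `cluster.local` cluster domain are all illustrative assumptions, not values your cluster necessarily uses.

```python
# Illustrative only: the StatefulSet, Service, and namespace names are
# hypothetical, and "cluster.local" assumes the default cluster domain.
def broker_addresses(statefulset: str, service: str, namespace: str,
                     replicas: int, port: int = 9092) -> list[str]:
    """Return one stable DNS address per broker pod.

    StatefulSet pods are named <statefulset>-<ordinal>; behind a headless
    Service each pod is reachable at <pod>.<service>.<namespace>.svc.cluster.local.
    """
    return [
        f"{statefulset}-{i}.{service}.{namespace}.svc.cluster.local:{port}"
        for i in range(replicas)
    ]


def bootstrap_servers(addresses: list[str]) -> str:
    # Kafka clients take a comma-separated host:port list in bootstrap.servers.
    return ",".join(addresses)


if __name__ == "__main__":
    addrs = broker_addresses("kafka", "kafka-headless", "default", replicas=3)
    print(bootstrap_servers(addrs))
```

Clients only need to reach one of these addresses to discover the rest of the cluster, but listing several makes the initial connection more robust if a broker pod is restarting.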

Kafka was originally created at LinkedIn and is now maintained by the Apache Software Foundation (ASF). Because it runs on the JVM, Kafka can run on Linux, Windows, and other operating systems, although Linux is the usual choice for production deployments. Kafka provides a set of APIs (Producer, Consumer, Streams, Connect, and Admin) that let users build custom applications on top of its core functionality.

What is Kafka?

Kafka is essentially a messaging system built around the concept of streams. A stream is an ordered, continuously growing series of messages, organized into named topics, that producers write and consumers read. Producers and consumers can be servers, client applications, mobile phones, IoT devices, and so on.

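Each message in Kafka is called a record. A record consists of an optional key, a value (the message payload), a timestamp, and optional headers that carry application-defined metadata. Records are not fixed-size chunks: their size depends on their contents, up to a configurable maximum. Kafka does not store the sender's identity in a record by default; applications that need it can put it in a header.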
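To make the structure of a record concrete, here is a minimal sketch that models one as a Python dataclass. `Record` and `size_hint` are hypothetical names for illustration; this is not the API of any Kafka client library, and the size estimate ignores Kafka's actual on-disk encoding.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Record:
    """Illustrative model of a Kafka record (not a real client class)."""
    value: bytes                       # the message payload
    key: Optional[bytes] = None        # optional; often used to pick a partition
    # Kafka timestamps are expressed in milliseconds since the Unix epoch.
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))
    # Headers are ordered (name, value) pairs; the same name may appear twice.
    headers: tuple[tuple[str, bytes], ...] = ()

    def size_hint(self) -> int:
        # Rough payload size: records are variable-sized, growing with content.
        key_len = len(self.key) if self.key is not None else 0
        header_len = sum(len(name.encode()) + len(val) for name, val in self.headers)
        return key_len + len(self.value) + header_len


if __name__ == "__main__":
    payment = Record(key=b"customer-42", value=b'{"amount": 19.99}',
                     headers=(("source", b"checkout-service"),))
    print(payment.key, payment.size_hint())
```

Here the `source` header shows how an application could attach sender information itself, since Kafka does not add it automatically.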

Why use Kafka?

There are many reasons to consider Kafka. It is highly scalable, fault-tolerant, and reliable. Each partition of a topic is replicated across several brokers: one replica acts as the leader and the others follow it, and if the leader's broker fails, an in-sync follower is elected as the new leader. Because of this replication, there is no single point of failure. Kafka does still need monitoring and operational management, however; brokers expose metrics through JMX, which are commonly fed into monitoring tools.
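The failover behaviour described above can be sketched with a simplified model of one replicated partition. `Partition` and `on_broker_failure` are hypothetical names, and the rule used here (pick the first surviving in-sync replica in the assigned replica order, otherwise take the partition offline) is a simplification of what Kafka's controller does, not a reimplementation of it.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Simplified model of one replicated partition (illustrative only)."""
    replicas: list[int]                          # broker IDs holding a copy
    leader: int                                  # broker serving reads and writes
    isr: set[int] = field(default_factory=set)   # in-sync replica broker IDs

    def on_broker_failure(self, broker_id: int) -> None:
        # A failed broker can no longer be in sync.
        self.isr.discard(broker_id)
        if broker_id != self.leader:
            return  # losing a follower does not change the leader
        # Choose the first remaining in-sync replica in assigned order.
        candidates = [b for b in self.replicas if b in self.isr]
        if not candidates:
            # With no in-sync replica left, the partition becomes unavailable
            # rather than risking data loss.
            raise RuntimeError("no in-sync replica available; partition offline")
        self.leader = candidates[0]


if __name__ == "__main__":
    p = Partition(replicas=[1, 2, 3], leader=1, isr={1, 2, 3})
    p.on_broker_failure(1)
    print("new leader:", p.leader)
```

Keeping only in-sync replicas as candidates is what lets a failover happen without losing acknowledged writes.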

Another reason to consider Kafka is that it supports real-time processing. You can build applications that react to new messages as soon as they arrive.

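You can also use Kafka to store logs and events. An event is a record of something that has already happened; for example, if someone sends a payment request, you might want to log that as an event. Kafka keeps events in an append-only log: each new event is added to the end and assigned a sequential number called an offset, and events are retained for a configurable period, so consumers can read them, or re-read them, later.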
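A toy, in-memory version of such a log helps show why offsets matter, both for storing events and for the real-time processing mentioned above. `AppendOnlyLog` and `Consumer` are hypothetical names; the sketch models a single partition, ignores retention and persistence, and is not how a real Kafka client is used.

```python
class AppendOnlyLog:
    """Toy in-memory version of a single partition's log (illustrative only)."""

    def __init__(self) -> None:
        self._entries: list[bytes] = []

    def append(self, event: bytes) -> int:
        # New events always go at the end; the returned offset is their position.
        self._entries.append(event)
        return len(self._entries) - 1

    def read(self, offset: int, max_events: int = 10) -> list[tuple[int, bytes]]:
        # Reading never removes events, so many consumers can read the
        # same data independently, each tracking its own offset.
        end = min(offset + max_events, len(self._entries))
        return [(i, self._entries[i]) for i in range(offset, end)]


class Consumer:
    """Tracks its own position, loosely like a consumer's committed offset."""

    def __init__(self, log: AppendOnlyLog, start_offset: int = 0) -> None:
        self.log = log
        self.position = start_offset

    def poll(self) -> list[bytes]:
        batch = self.log.read(self.position)
        if batch:
            # Advance past the last event we received.
            self.position = batch[-1][0] + 1
        return [event for _, event in batch]


if __name__ == "__main__":
    log = AppendOnlyLog()
    log.append(b"payment-requested")
    log.append(b"payment-approved")
    live = Consumer(log)
    print(live.poll())          # both events
    log.append(b"payment-settled")
    print(live.poll())          # only the new event
    replay = Consumer(log)      # a second consumer re-reads from offset 0
    print(replay.poll())
```

Because each consumer keeps its own position, a live application can react to new events while another consumer replays the full history from the beginning.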

How does Kafka work?

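Kafka works on the principle of partitioning: each topic is split into partitions that are spread across the brokers in the cluster, so that reads and writes are shared among many machines. By default, records with the same key go to the same partition, which keeps them in order. To build some intuition, let us first look at an example. Suppose a group of people who follow football want to know whether their team won or lost, so they ask friends who saw the game.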

If there were 100 people in the group and 20 of them wanted to know the result, each of those 20 would send a message to a friend asking whether the team won. The friend would simply reply ‘yes’ or ‘no’.

Now imagine that all 100 people want to know whether their team won or lost. Instead of each of them messaging friends separately, they agree to share a single answer, so instead of 100 individual messages there is only 1 message.

This same thing applies to Kafka. Imagine that we have 10 brokers and 1000 partitions. Now say that we have 1000 producers that produce messages. We will still only have 1 message. But the difference lies in the fact that instead of having 10,000 individual messages, we will have